commit c0f603c (2025-10-20 17:32:51 -0400) Torsten Scholak: Simplify JSON instances and refactor publication fields with type-safe GADTs

The Home Supercomputer Fallacy

Tagged as: gpu infrastructure economics

Posted on Oct 15, 2025

7 min read

NVIDIA's DGX Spark promises to put an "AI supercomputer" on your desk. But for most people, owning a $4,000 box is slower, less flexible, and more expensive than renting smartly. Here's why.

The DGX Spark Hype

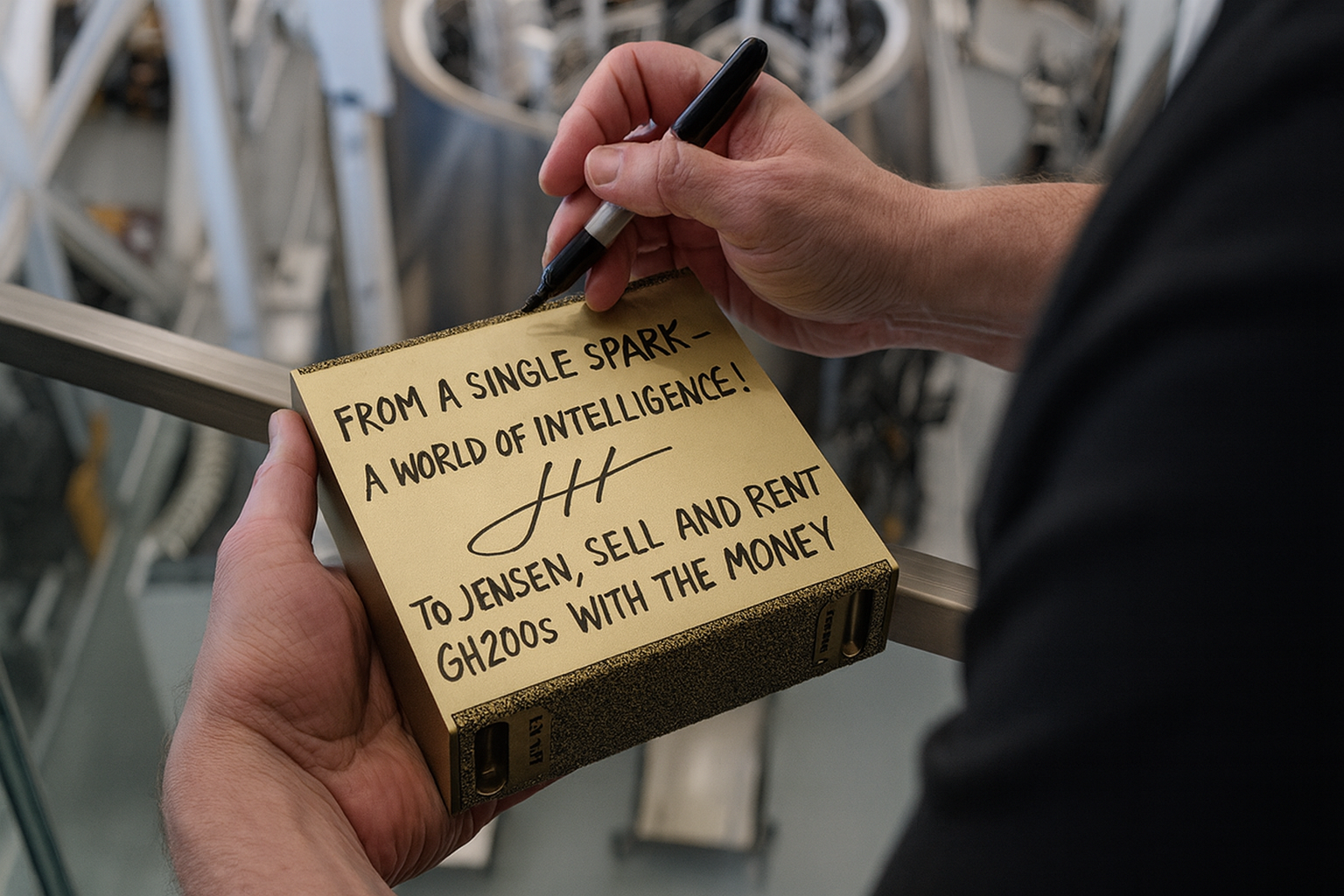

NVIDIA just released the DGX Spark, a $3,999 "AI supercomputer for your desk" the size of a Mac Mini. It ships with 128 GB of unified memory, a Blackwell GPU, and marketing that borders on poetry:

"Own your compute!"

"Escape cloud vendor lock-in!"

"Run the largest models yourself at home!"

Influencers are already unboxing and calling it a game-changer for "taking back control" from the cloud.

Here's the problem: it's slow for real work. Worse, the whole premise is a trap because you can get better performance, zero idle cost, and a persistent environment elsewhere for less money.

The Spark's Actual Performance

Running GPT-OSS 20B in Ollama (via @LMSYS):

| Hardware / Model | Precision | Prefill (tokens/sec) | Decode (tokens/sec) | Relative Speed vs Spark | Notes |

|---|---|---|---|---|---|

| DGX Spark (GB10) | 4-bit (mxfp4) | 2,054 | 49.7 | 1x | 128 GB LPDDR5X @ 273 GB/s unified memory |

| RTX 6000 Blackwell | 4-bit (mxfp4) | 10,108 | 215 | 4.3x faster | Workstation GPU with ~1 TB/s bandwidth |

| GeForce RTX 5090 | 4-bit (mxfp4) | 8,519 | 205 | 4.0x faster | Consumer flagship GPU |

| GH200 (Cloud, est.) | FP8 / FP16 | ~10,000-15,000 | ~250-350 | ~5-7x faster | 96 GB HBM3 @ 4 TB/s; $1.49/hr on Lambda |

The Spark is 4x slower than high-end GPUs on standard workloads. In SGLang, for a 70B model (FP8), it manages 2.7 tokens per second in generation. Not exactly "supercomputer" speed.

The bottleneck is the memory bandwidth, not the GPU cores. The Spark's 273 GB/s LPDDR5x sounds impressive until you realize an RTX 5090 has ~1 TB/s. The precious Blackwell GPU cores are starved for data. NVIDIA clearly traded bandwidth for price; cutting LPDDR5X instead of HBM keeps it under $4k and avoids cannibalizing the $8.5k RTX 6000.

The NVFP4 Trap

NVIDIA's marketing claims "1 petaFLOP" of performance or "1000 AI TFLOPS." Technically true, but only for NVFP4, which is NVIDIA's new proprietary 4-bit floating-point format that almost no models use yet.

Load a standard model and the Spark essentially behaves like a mid-range GPU with relatively weak inference performance. You're buying hardware optimized for a format the ecosystem hasn't adopted yet.

Want the Spark's advertised performance? You need models specifically trained or converted to NVFP4. Possible, but not convenient.

The False Binary

The debate has calcified into two positions: buy expensive hardware vs rent expensive cloud.

That's the wrong framing entirely. The real question isn't ownership vs rental because granularity of commitment matters.

What people actually need is:

- Burst access to serious compute (H100, GH200, 8xA100) when working on big models.

- Zero cost when idle.

- Persistent environment between sessions.

The Spark gives you one or two of these at $4,000 upfront. AWS gives

you two of three at premium pricing.

Lambda and other neoclouds give you all three for about

as low as $1.49/hour for a GH200 on demand with no upfront cost or

commitment.

Break-even napkin math:

- $3,999 ÷ $1.49/hr = 2,685 hours

- At 8 hrs/week: 6.45 years of full utilization

- At 8 hrs/day: 336 days of full utilization

This assumes your $4k Spark matches GH200 performance (it doesn't) and the Spark has zero power/cooling costs (it does).

If you are hitting 60%+ duty cycle every day training models or doing heavy inference, you're not doing enthusiast experiments anymore but production workloads. You need a big rig or a cluster, not a Spark.

The Real Bottleneck is Ceremony

While renting compute on neoclouds is cheap and easy, the workflow friction is the real barrier:

$ launch instance

... wait several minutes

... "is it up already?"

... ssh in, install everything from scratch

... "wait, how did I get FlashAttention working with this version of PyTorch again?"

... "shit, I forgot to mount my data"

... "where's that experiment config file? did I save it?"

... do 30 minutes of work

... terminate instance

... lose everythingSo in the end you default to your laptop and don't do any of the real work you intended to do.

Declarative Ephemeral Infrastructure

Simple fix: persistent storage + declarative environment + intelligent retry.

devbox command=up_gh200(devbox is a tool I wrote to manage ephemeral GPU instances, link at end)

This launches a GH200 instance with:

- Your entire

/homedirectory on persistent NFS - Nix store on a 100GB loop device (survives termination)

- SSH config auto-generated

- Exponential backoff on capacity errors

Terminate when done. Environment persists. Next launch: instant. Same packages, same state.

Common Objections

"But M4/M5 Macs are faster!" Yep. For models under 30B, an M4 Mac matches or beats the Spark and doubles as a general-purpose machine. The Spark's 128GB advantage matters for massive models, except: a 120B model runs at ~14-15 tokens/sec on Spark vs ~53 tokens/sec on an M2 Max Mac Studio.

"What about AMD Strix Halo?" Faster on many workloads, roughly half the price. Trade-off: you lose the mature CUDA ecosystem. (Well, ROCm is getting better, but the compute capabilities of a Max+ 395 are not the same as a Blackwell.) Pure inference? Strix wins. Development and scaling? Spark's NVIDIA stack matters.

Local data access? On first launch you set up your dotfiles, pull any repos you need, and set up development environments with nix or uv. After that, everything is on an NFS mount. No difference from local disk from then on. Persistent storage is dirt cheap.

Latency? SSH latency is ~20-50ms. Irrelevant for training/inference.

Vendor lock-in? Lambda's API is just HTTP. Migrating to another provider is a weekend project if needed.

Capacity availability? GH200s are scarce. Retry logic handles it. In practice: occasional 10-20min waits for capacity, then instant access, followed by many hours of uninterrupted work. That overhead is a rounding error compared to the cost of owning hardware.

Conclusion

The DGX Spark exists because "AI supercomputer for your home office" sells better than "expensive, slow, vendor-locked bet on NVFP4." NVIDIA admits the Spark isn't designed for raw performance but as a "developer-friendly platform" for people prototyping before scaling to real DGX systems or cloud. It's also a $4k stepping stone to lock you further into the DGX ecosystem. The "own your infrastructure" narrative is seductive, but all the brilliant marketing can't hide the fact that for many people this is a terrible purchase and renting is simply better.

The Spark conflates ownership with commitment. You can own your data, environment, and state without owning idle silicon. Renting ephemeral GPUs gives you better performance, zero idle cost, and the same persistence.

Don't buy the "home supercomputer." Rent smart. Work smart.

Disclaimer: I have no relationship with Lambda Labs or NVIDIA. I just do a lot of training framework development and can afford to be opinionated about infrastructure.

Disclaimer 2: The author of this article has since purchased a DGX Spark because he thinks it looks cool and makes him look smart at parties. Just kidding.

Next Steps

If you want to try this out, check out devbox, my little opinionated tool to manage ephemeral GPU instances easily. Drop me a line if you have questions or feedback!