Apriel-H1

Why efficiency-optimized reasoning matters now

Torsten Scholak — Lead Research Scientist

SLAM Lab — ServiceNow

November 2025

Efficiency = Capability

Apriel matches frontier reasoning models at just 15B parameters.

But full attention pays the quadratic tax → long-context throughput is now the bottleneck.

Speed creates capability:

- Agents keep full tickets/logs in memory → fewer compactions

- More tools per turn at same latency

- Deeper reasoning chains with more steps → better accuracy

- Larger RAG contexts stay in-context

- Higher req/s on existing fleet → lower unit cost, better UX

That's why we're building efficient hybrids.

How Hybrids Work

| | Full Attention | Efficient (Linear/Sparse) | Hybrid |
|---|---|---|---|
| Compute vs. sequence length n | O(n²) | O(n) or sub-quadratic | Mixed |
| KV cache | Large, grows linearly with n | Small or none | Reduced ~50-75% |
| Global fidelity | Perfect | Limited | Preserved in key layers |
| Throughput gain | 1× | 2-10× (but quality risk) | 2-10× at minimal quality Δ |
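To make the "Complexity" and "KV cache" rows concrete, here is a minimal sketch contrasting token-by-token decoding with causal softmax attention and with a toy, unnormalized linear attention. This is an illustrative assumption, not Mamba itself: Mamba is a selective state-space model with input-dependent decay, but it shares the key property shown here, a fixed-size state instead of a growing cache. The 1/sqrt(d) score scaling is omitted for brevity.

```python
import math


def softmax_attention_decode(queries, keys, values):
    """Causal softmax attention, decoded one token at a time.

    Every step appends the new (k, v) pair to a KV cache and attends over all
    of it, so step t costs O(t) work and the cache holds n pairs after n tokens.
    (No 1/sqrt(d) scaling, for brevity.)
    """
    kv_cache = []  # grows by one (k, v) pair per token
    outputs = []
    for q, k, v in zip(queries, keys, values):
        kv_cache.append((k, v))
        scores = [sum(qi * ki for qi, ki in zip(q, kc)) for kc, _ in kv_cache]
        m = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - m) for s in scores]
        z = sum(weights)
        outputs.append([
            sum(w * vc[i] for w, (_, vc) in zip(weights, kv_cache)) / z
            for i in range(len(v))
        ])
    return outputs, len(kv_cache)


def linear_attention_decode(queries, keys, values):
    """Toy causal linear attention (no feature map, no normalization).

    A fixed d_v x d_k state S is updated as S_t = S_{t-1} + v_t k_t^T and read
    out as o_t = S_t q_t, so per-token cost and memory do not depend on t.
    """
    d_k, d_v = len(keys[0]), len(values[0])
    state = [[0.0] * d_k for _ in range(d_v)]
    outputs = []
    for q, k, v in zip(queries, keys, values):
        for i in range(d_v):
            for j in range(d_k):
                state[i][j] += v[i] * k[j]
        outputs.append([sum(state[i][j] * q[j] for j in range(d_k)) for i in range(d_v)])
    return outputs, d_v * d_k
```

After n tokens the softmax path holds n cached (k, v) pairs, while the linear path still holds d_v × d_k numbers; that fixed state is what removes the per-token KV cache, at the cost of compressing all history into it (the "Limited" global fidelity row).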

Pattern: keep ~20-30% of layers as full attention (FA) for global reasoning, and replace the rest with Mamba/linear/sparse mechanisms.
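One simple way to realize this pattern is a fixed interleave: spread the FA layers evenly through the stack. The sketch below assumes that even spacing (the function name `hybrid_layout` is hypothetical); it is not how Apriel-H1 picks its layers, which are chosen by importance scoring instead.

```python
def hybrid_layout(num_layers: int, num_full_attention: int) -> list[str]:
    """Spread full-attention ("FA") layers evenly through a stack of efficient ("M") layers.

    Each FA layer sits at the last position of an equally sized block, so with
    num_layers=8 and num_full_attention=2 every fourth layer is FA (a 3:1 ratio).
    """
    if not 0 <= num_full_attention <= num_layers:
        raise ValueError("need 0 <= num_full_attention <= num_layers")
    layout = ["M"] * num_layers
    for i in range(num_full_attention):
        layout[(i + 1) * num_layers // num_full_attention - 1] = "FA"
    return layout


layout = hybrid_layout(40, 10)
print(" ".join(layout[:8]), "...")                        # M M M FA M M M FA ...
print(f"FA share: {layout.count('FA') / len(layout):.0%}")  # FA share: 25%
```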

Hybrids Are Shipping at Scale

- Apr, NVIDIA Nemotron-H-47B: 9:1 Mamba-2:FA hybrid, ≈3× faster vs dense 70B at long ctx

- May, Falcon-H1-34B: parallel Mamba-2 + FA hybrid, 4× prefill, 8× decode at long ctx

- Jun, MiniMax-M1: 7:1 Lightning:FA hybrid, ≈3–4× faster decode @100k tokens

- Aug, Nemotron-Nano-9B-v2: 7:1 Mamba-2:FA hybrid, up to 6× throughput vs Qwen3-8B

- Sep, Qwen3-Next-80B-A3B: 3:1 Gated-DeltaNet:FA hybrid, >10× throughput vs Qwen3-32B @>32k

- Sep, DeepSeek V3.2-Exp: MLA+DSA sparse, 1:64 attended:total tokens @128k, 3× faster at long ctx

- Oct, Kimi-Linear-48B-A3B: 3:1 KLA:FA hybrid, 75% KV↓, up to 6× decode @1M
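The efficient:FA ratios above translate directly into a best-case KV-cache saving. The sketch below assumes the efficient layers keep no per-token KV cache and every FA layer has the same KV footprint; real models deviate (recurrent states are not free, and FA layers may use compressed caches such as MLA). Under those assumptions a 3:1 hybrid saves 75%, in line with the Kimi-Linear figure. The arithmetic does not apply to the DeepSeek sparse-attention entry, which is not a layer-ratio hybrid.

```python
from fractions import Fraction


def kv_cache_reduction(efficient: int, full: int) -> Fraction:
    """Best-case KV-cache reduction of an efficient:full layer hybrid vs. an
    all-FA model of the same depth (assumes efficient layers cache nothing per token)."""
    return Fraction(efficient, efficient + full)


for name, (eff, full) in {
    "Nemotron-H (9:1)": (9, 1),
    "MiniMax-M1 / Nemotron-Nano-9B-v2 (7:1)": (7, 1),
    "Qwen3-Next / Kimi-Linear (3:1)": (3, 1),
}.items():
    r = kv_cache_reduction(eff, full)
    print(f"{name}: FA share {float(1 - r):.1%}, KV cache reduced {float(r):.1%}")
```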

Apriel-H1

What You Get

Today we release Apriel-H1:

- Hybrid reasoner distilled from Apriel-15B

- ~2× throughput in vLLM with minimal quality deltas

- Runs today in vLLM

Proof It Works

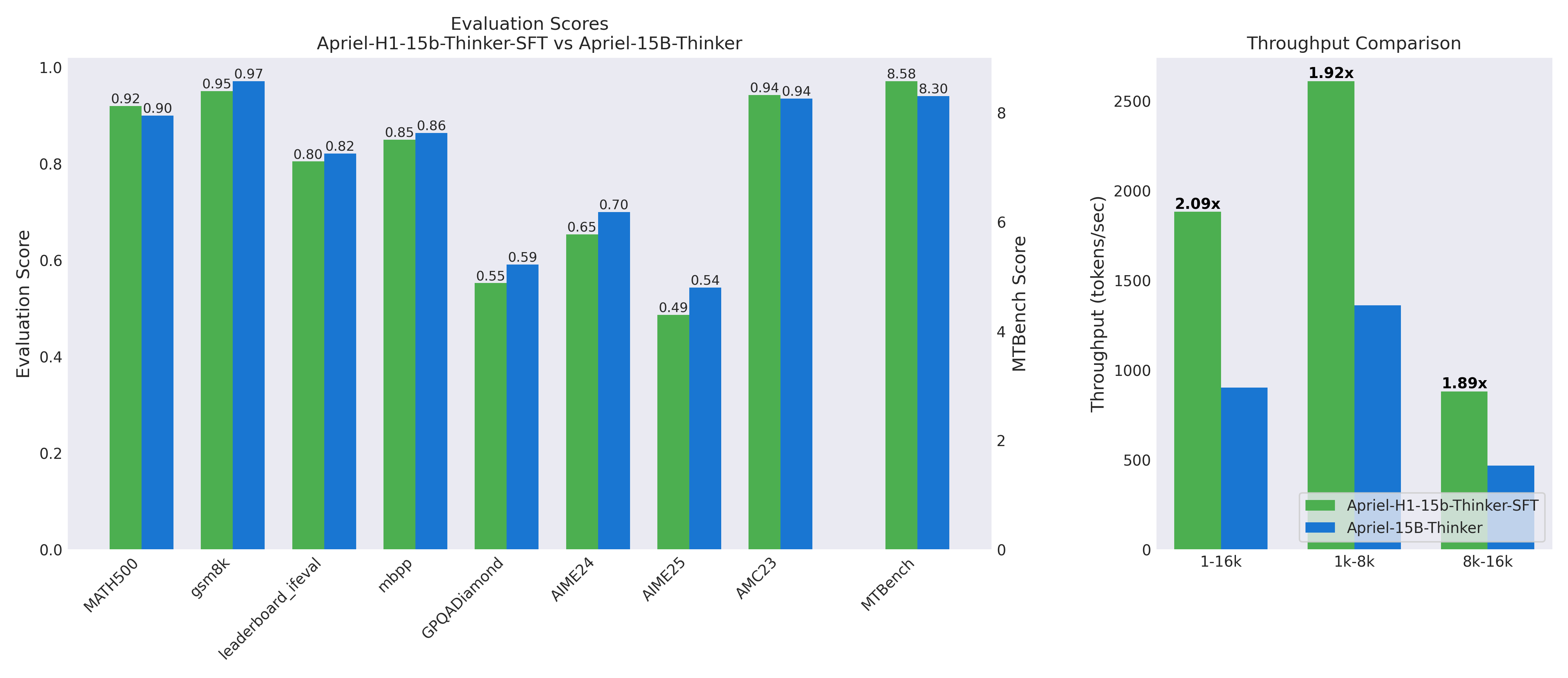

| Metric | Apriel-15B | Apriel-H1-30 | Δ |
|---|---|---|---|
| Throughput (vLLM) | 1× | ~2× | ~2× speedup |
| MATH500 | 90 | 92 | +2 |
| GSM8k | 97 | 95 | −2 |
| AIME'24 | 70 | 65 | −5 |
| GPQA-D | 59 | 55 | −4 |
| MBPP | 86 | 85 | −1 |
| MT-Bench | 8.30 | 8.58 | +0.28 |

Evaluation Results

How Apriel-H1 Works

Architecture — H1-30

- Start: Apriel-15B teacher (50 FA layers)

- Replace the 30 least-critical FA layers with Mamba (no KV cache, linear time)

- Keep 20 FA layers to preserve global patterns
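A back-of-envelope sketch of what keeping 20 of 50 FA layers means for KV-cache memory. The head configuration below (8 KV heads, head dim 128, bf16) is a hypothetical placeholder, not Apriel-15B's published config, and the Mamba layers' fixed-size recurrent state is ignored; the 40% ratio itself depends only on the layer counts.

```python
def kv_bytes_per_token(num_fa_layers: int, num_kv_heads: int, head_dim: int,
                       bytes_per_value: int = 2) -> int:
    """Per-token KV-cache size: one K and one V vector per KV head per FA layer.
    Mamba layers are assumed to add nothing per token."""
    return 2 * num_fa_layers * num_kv_heads * head_dim * bytes_per_value


# Hypothetical head config, NOT Apriel-15B's actual one.
NUM_KV_HEADS, HEAD_DIM = 8, 128
CONTEXT = 32_768

teacher = kv_bytes_per_token(50, NUM_KV_HEADS, HEAD_DIM)  # all 50 layers are FA
h1_30 = kv_bytes_per_token(20, NUM_KV_HEADS, HEAD_DIM)    # 20 FA kept, 30 -> Mamba
for name, per_tok in [("teacher", teacher), ("H1-30", h1_30)]:
    print(f"{name}: {per_tok / 1024:.0f} KiB/token, "
          f"{per_tok * CONTEXT / 2**30:.2f} GiB per 32k-token sequence")
```

With these placeholder numbers the teacher needs 200 KiB per token (6.25 GiB per 32k-token sequence) and H1-30 needs 80 KiB (2.50 GiB), so more sequences fit in the same GPU memory.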

Distillation — 3 steps

- Score layer importance (leave-one-out (LOO) performance drop + MMR distillation loss)

- Swap low-importance FA → Mamba (MIL-style init from attention)

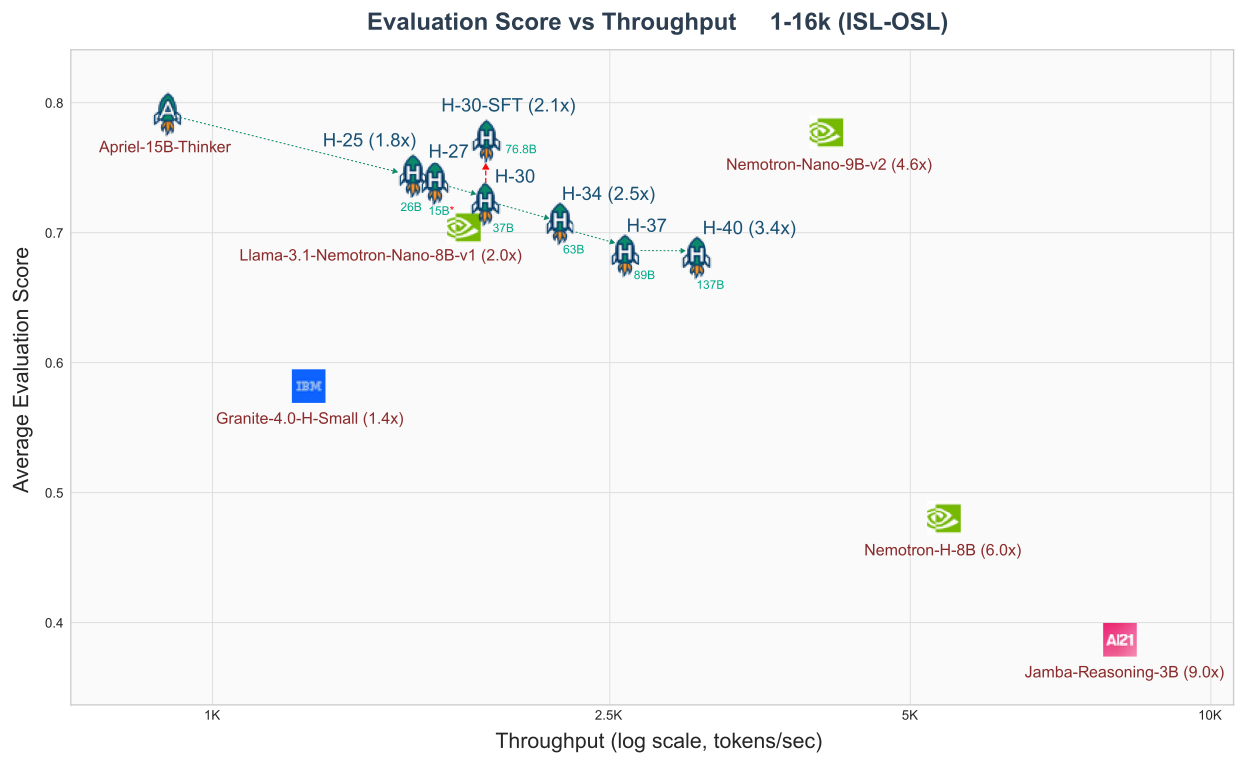

- Stage & gate: H1-25 → H1-27 → H1-30 (… H1-34/37/40) with reverse-KL distillation; ship the checkpoint with the best quality/throughput trade-off
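The reverse-KL objective named in the staging step can be sketched per token position as KL(p_student ‖ p_teacher), with the expectation taken under the student, which makes it mode-seeking. This is a minimal illustration from raw logits; how positions are averaged and whether training sequences are sampled from the student are not stated in the source and are left out.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over a list of logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def reverse_kl(student_logits, teacher_logits):
    """KL(p_student || p_teacher) at one token position:
    sum_x p_s(x) * (log p_s(x) - log p_t(x)), expectation under the student."""
    log_ps = log_softmax(student_logits)
    log_pt = log_softmax(teacher_logits)
    return sum(math.exp(ls) * (ls - lt) for ls, lt in zip(log_ps, log_pt))
```

For example, a confident student (logits `[2.0, 0.0]`) against a uniform teacher (`[0.0, 0.0]`) gives a reverse KL of about 0.328, while the forward direction gives about 0.434; the asymmetry is why the choice of direction matters.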

Teacher (50L): [FA][FA][FA][FA][FA][FA][FA][FA][FA][FA] ...

H1-30: [FA][FA][FA][M ][FA][M ][M ][M ][M ][FA] ...

Key: FA = full-attention layer ("keep"); M = Mamba layer ("convert")

Figure: Eval Score vs Throughput
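The "keep"/"convert" split can be sketched as a leave-one-out ranking: ablate one layer at a time, measure the score drop, and convert the k layers whose removal hurts least. This is a simplified sketch under stated assumptions: `evaluate` is a hypothetical callback (what "ablated" means, e.g. identity or a Mamba mixer, is up to the caller), and the MMR distillation-loss signal the source combines with LOO is not modeled.

```python
from typing import Callable, FrozenSet, List


def loo_importance(num_layers: int,
                   evaluate: Callable[[FrozenSet[int]], float]) -> List[float]:
    """Leave-one-out importance: score drop when a single layer is ablated.
    `evaluate(ablated)` is a hypothetical callback returning a benchmark score
    with the layers in `ablated` replaced."""
    baseline = evaluate(frozenset())
    return [baseline - evaluate(frozenset({i})) for i in range(num_layers)]


def layers_to_convert(importance: List[float], k: int) -> List[int]:
    """Indices of the k layers whose removal hurts least, returned in layer order."""
    ranked = sorted(range(len(importance)), key=lambda i: importance[i])
    return sorted(ranked[:k])


# Toy stand-in for a real eval: each layer contributes a fixed amount to the score.
contribution = [5.0, 0.1, 3.0, 0.2, 0.3, 4.0]


def toy_eval(ablated: FrozenSet[int]) -> float:
    return 100.0 - sum(contribution[i] for i in ablated)


imp = loo_importance(len(contribution), toy_eval)
print(layers_to_convert(imp, 3))  # [1, 3, 4]
```

A single LOO pass ignores interactions between layers, which is one reason to convert in stages (H1-25 → H1-27 → H1-30) and re-check quality at each gate rather than converting everything at once.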

What's Next

Apriel-H2 roadmap:

- Advanced mixers: Gated DeltaNet, Kimi Linear Attention

- Higher efficient-to-full ratios: Search-guided layer placement

- Stacked optimizations: Sliding window + quantization + sparse attention

- Target: 5-10× throughput while maintaining reasoning quality
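For reference, Gated DeltaNet (one of the roadmap mixers) replaces the purely additive linear-attention state update with a gated delta rule. Below is the recurrence as formulated in the Gated DeltaNet paper, with state $\mathbf{S}_t \in \mathbb{R}^{d_v \times d_k}$, decay gate $\alpha_t \in (0,1)$ and write strength $\beta_t \in (0,1)$; how Apriel-H2 would integrate it is not described here.

```latex
\mathbf{S}_t = \mathbf{S}_{t-1}\,\alpha_t\left(\mathbf{I} - \beta_t\,\mathbf{k}_t\mathbf{k}_t^{\top}\right) + \beta_t\,\mathbf{v}_t\mathbf{k}_t^{\top},
\qquad
\mathbf{o}_t = \mathbf{S}_t\,\mathbf{q}_t
```

Setting $\alpha_t = 1$ recovers the ungated delta rule (DeltaNet). Either way the state stays $d_v \times d_k$ regardless of sequence length, so it keeps the no-KV-cache property of the Mamba layers used in H1.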

The path forward:

- From-scratch hybrid training gives the highest quality ceiling

- Distillation offers practical retrofitting for existing models

- Both approaches matter for different constraints

Thank You

Apriel-H1: Efficient Reasoning Through Hybrid Architectures

SLAM Lab — ServiceNow

Contact: Torsten Scholak (torsten.scholak@servicenow.com)

Team: Oleksiy Ostapenko, Luke Kumar, Raymond Li, Denis Kocetkov, Joel Lamy-Poirier